9 Things Only iPhone Power Users Take Advantage of on Their Devices

For many, it’s difficult to imagine life before smartphones.

At the same time, it’s hard to believe that the original Apple iPhone, considered a genuine unicorn at the time thanks to its superior experience and stunning, rainbow-worthy display, was released more than 10 years ago.

Even though the iPhone is older than most grade school students, some of its capabilities remain a mystery to the masses.

Sure, we all hear about the latest, greatest features, but what about those lingering in the background just waiting to be discovered?

Getting a handle on those capabilities is what separates you, a soon-to-be power user, from those who haven’t truly unleashed the iPhone’s full potential.

So, what are you waiting for? Release that unicorn and let it run free like the productivity powerhouse it was always meant to be.

Here are 9 ways to get started.

1. Get Back Your Closed Tabs

We’ve all done it. While moving between tabs or screens, our fingers tap the little “x” and close an important browser tab.

With the iPhone, all is not lost. You can get that epic unicorn meme back from oblivion!

The included Safari browser makes recovering a recently closed tab a breeze. Learn more about the process here: Reopen Tabs

2. Smarter Photo Searching

Searching through photos hasn’t always been the most intuitive process…until now.

Before, you had to rely on labels and categories to support search functions. But now, thanks to new machine-learning-powered features, the Photos app is more powerful than ever.

The iPhone can recognize thousands of objects, even ones you’ve never labeled yourself. That means you can search by keyword to find images containing specific items or featuring a particular person.

Just put the keyword in the search box and let the app do the hard part for you.

3. Find Out Who’s Calling

Sometimes, you can’t simply look at your iPhone’s screen to see who’s calling. Maybe you’re across the room, driving down the road, or have the phone safely secured while jogging.

Regardless of the reason, just grabbing it quickly isn’t an option. But that doesn’t mean you want to sprint across the room, pull your car over, or stop your workout just to find out it’s a robo-dial.

Luckily, you can avoid this conundrum by setting up Siri to announce who’s calling. That way, you’ll know whether a call is worth answering before you break away from the task at hand.

See how here: Siri Announce Calls

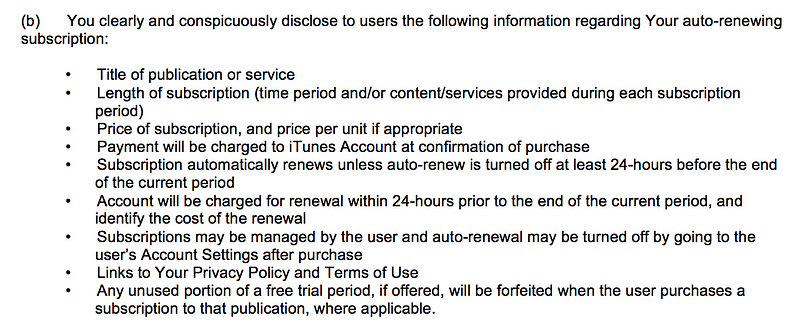

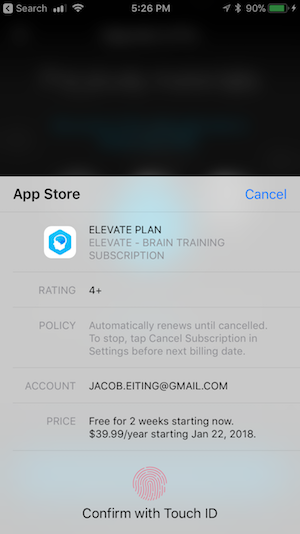

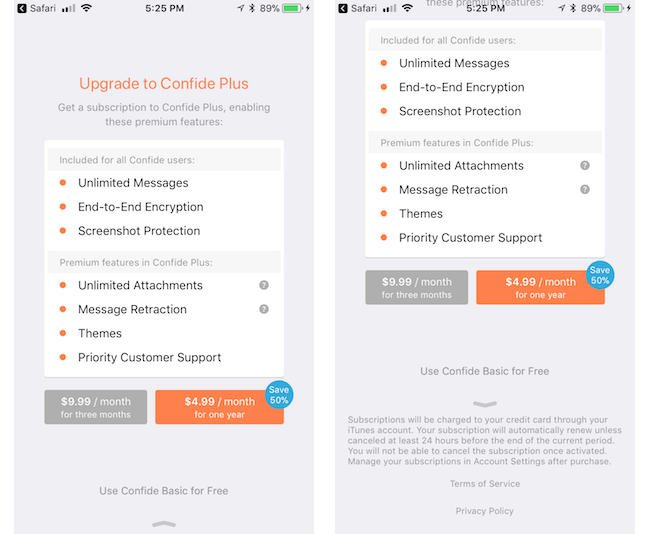

4. Stop Squinting to Read Fine Print

In the business world, fine print is the donkey we all face on a regular basis. You can’t sign up for a service or look over a contract without running into some very small font sizes.

Thanks to the iPhone, you don’t have to strain your eyes (and likely give yourself a headache) to read the fine print on paper. Just open Magnifier, and your camera becomes a magnifying glass.

See how it’s done here: Magnifier

5. Clear Notifications En Masse

Yes, notifications can be great. They let you know what’s happening without having to open every app individually.

But if you haven’t checked your iPhone for a while, they can also pile up quickly. And who has the time to handle a huge list of notifications one at a time?

iPhones that feature 3D Touch (iPhone 6s or newer) let you clear all of your notifications at once.

Clear your screen by following the instructions here: Clear Notifications

6. Close Every Safari Tab Simultaneously

iPhones running iOS 10 can support an “unlimited” number of Safari tabs at once. While this is great if you like keeping a lot of sites open, it can also get out of hand quickly if you don’t close the ones you no longer need.

If you have more tabs open than stars in the sky, you can set yourself free and close them all at once.

To take advantage of this virtual reset, see the instructions here: Close All Safari Tabs

7. Request Desktop Site

While mobile sites offer a handy, optimized experience, they can also be very limiting. Not every mobile version has the features you need to get things done, and requesting the desktop version wasn’t always easy.

Now, you can get to the full desktop site with ease. Just press and hold the refresh button in the address bar at the top of the browser screen, and you’ll be given the option to request the desktop site.

8. Get a Trackpad for Email Cursor Control

There you are, doing the daily task of writing out emails or other long messages. As you go along, you spot it: a mistake a few sentences back.

Trying to use a touchscreen to get back to the right place isn’t always easy, especially if the error rests near the edge of the screen.

Now, anyone with a 3D Touch-enabled device can leave that frustration in the past. The keyboard can be turned into a trackpad, giving you the cursor control you’ve always dreamed of having, the equivalent of finding a unicorn at the end of a rainbow.

Learn how here: Keyboard Trackpad

9. Force Close an Unresponsive App

If a single app isn’t doing its job, but the rest of your phone is operating fine, you don’t have to restart your phone to get the app back on track.

Instead, you can force close the unresponsive app from the multitasking view, which shows the recently used apps sitting in the background.

Check out how it’s done here: Force Close an App
