Unlocking Web Audio — the smarter way

The Web Audio API provides a powerful and versatile system for controlling audio on the Web, allowing developers to choose audio sources, add effects to audio, create audio visualizations, apply spatial effects (such as panning) and much more.

It can be used for developing sophisticated web-based games or interactive applications which include the capabilities of modern game audio engines as well as some of the mixing, processing, and filtering tasks found in modern desktop audio production applications; and it can be a very good complement to the more advanced graphics features offered by WebGL.

All this sounds awesome (pun intended), but on iOS devices there is a slight caveat: the Web Audio API requires sounds to be triggered from an explicit user action, such as a tap, before any sound can be played on a web page.

iOS imposes this user-gesture restriction to preserve a pleasant user experience, by preventing ads or videos from playing sounds before the user actually interacts with the content, and to save battery life, since playing audio requires additional processing power.

Within the Web Audio API everything happens inside an AudioContext, an audio-processing graph built from audio nodes linked together, which controls both the creation of the nodes it contains and the execution of the audio processing, or decoding.

On iOS devices this context is initially suspended (“locked”), and to enable sounds on a web page we need to get it out of the suspended state, or “unlock” it, within the first user interaction.

An earlier solution to this issue proposed creating an empty sound on the fly and playing it back once the user interacts with the page.

This approach works because playing an audio source within a locked audio context switches the context to the running state before actually playing the audio.
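For reference, the older “empty sound” trick might look roughly like the sketch below. The helper name playSilentBuffer is hypothetical, the 22050 Hz sample rate is an arbitrary choice for a one-frame buffer, and the context is passed in as a parameter rather than read from a global.

```javascript
// Hypothetical helper illustrating the older "empty sound" unlock trick.
// On iOS it only has an effect when called from inside a user-gesture handler.
function playSilentBuffer(context) {
  // One channel, one sample frame, at an arbitrary 22050 Hz: a tiny silent buffer.
  const buffer = context.createBuffer(1, 1, 22050);
  const source = context.createBufferSource();
  source.buffer = buffer;
  source.connect(context.destination);
  // Starting a source is what moves a locked context into "running".
  source.start(0);
  return source;
}

// In a browser, e.g.:
// document.body.addEventListener("touchend", function () {
//   playSilentBuffer(audioContext);
// }, false);
```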

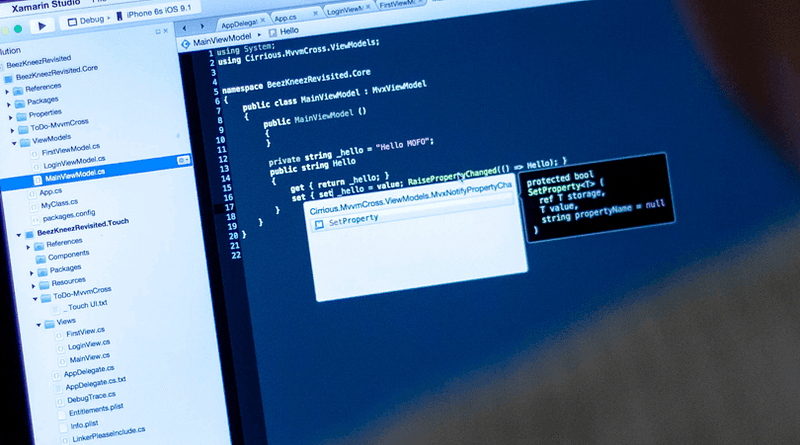

Let’s start putting some code together to try and come up with a simpler solution. First we need to instantiate an audio context to be able to do any audio manipulation:
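A minimal sketch of this step, not necessarily the author’s exact code. It assumes the standard AudioContext constructor with a fallback to the prefixed webkitAudioContext found in older Safari; the helper name createAudioContext and its scope parameter (window in a browser) are hypothetical.

```javascript
// Hypothetical helper: pick the standard constructor, or the prefixed one
// that older Safari versions expose, from a global scope object.
function createAudioContext(scope) {
  const AudioContextClass = scope.AudioContext || scope.webkitAudioContext;
  if (!AudioContextClass) {
    throw new Error("Web Audio API is not supported in this environment");
  }
  return new AudioContextClass();
}

// In a browser:
// const audioContext = createAudioContext(window);
```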

Right after creating the audio context, we should check its state property. If the state is equal to suspended, we might be dealing with an iOS device:
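As a sketch, assuming an audio context has already been created, the check can be as small as the function below. The name mightBeLocked is hypothetical; the state values “suspended” and “running” are the ones the Web Audio API defines.

```javascript
// Hypothetical helper: a context that starts out "suspended" may be locked
// (e.g. on iOS); "running" means it can produce sound right away.
function mightBeLocked(context) {
  return context.state === "suspended";
}

// In a browser:
// if (mightBeLocked(audioContext)) { /* possibly an iOS device */ }
```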

Some desktop browsers, like Firefox Quantum, leave an audio context in the suspended state right after instantiating it, so to make sure we are really dealing with an iOS device we need to check whether touch events are available as well:
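One way to sketch the combined check, assuming touch support is detected with the common "ontouchstart" in window test. The helper name needsTouchUnlock is hypothetical, and scope stands in for window so the function can also be called with a plain object.

```javascript
// Hypothetical helper: only treat the context as needing a touch unlock when
// it is suspended AND the environment supports touch events. This skips
// desktop browsers (e.g. Firefox Quantum) that also start out suspended.
function needsTouchUnlock(context, scope) {
  return context.state === "suspended" && "ontouchstart" in scope;
}

// In a browser:
// if (needsTouchUnlock(audioContext, window)) { /* likely iOS */ }
```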

If the audio context is suspended, we can call its resume() method to set it into the running state:
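A naive sketch of this step (the helper name resumeIfSuspended is hypothetical). It deliberately ignores the Promise that resume() returns, and on iOS it will not have the desired effect unless it runs inside a user interaction.

```javascript
// Hypothetical helper: ask a suspended context to resume. The returned
// Promise is ignored in this naive version.
function resumeIfSuspended(context) {
  if (context.state === "suspended") {
    context.resume();
  }
}

// In a browser:
// resumeIfSuspended(audioContext);
```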

Now, you might be thinking “well, it can’t be this simple…”

…and you are right 😔. This code needs to run within a user interaction in order to actually work. So we are going to wrap it in a function and add that function as a touch event listener.

To try to unlock the audio context as soon as possible, we will run our code on the first touchstart event that occurs anywhere on the page:
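A sketch of wiring the resume logic to touchstart. The helper name addTouchStartUnlock is hypothetical; target would be document.body in a browser and is a parameter here so the function can be exercised with any object that has addEventListener.

```javascript
// Hypothetical helper: resume the context from the first touchstart that
// reaches the target (document.body in a browser).
function addTouchStartUnlock(context, target) {
  function unlock() {
    if (context.state === "suspended") {
      context.resume();
    }
  }
  target.addEventListener("touchstart", unlock, false);
  return unlock;
}

// In a browser:
// addTouchStartUnlock(audioContext, document.body);
```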

One issue with this approach is that on some iOS versions, trying to unlock Web Audio on the touchstart event doesn’t work. A touchstart could be the beginning of a scroll gesture, and playing audio during this gesture could be completely undesirable from a user’s perspective.

To take this into account, we also need to try to unlock Web Audio from within the touchend event, in addition to the initial touchstart event:
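Extending the same idea, a sketch that registers one handler for both events (the helper name addTouchUnlock is hypothetical; target is document.body in a browser). If both events fire, resume() may be requested twice, which the state check keeps from doing anything once the context is running.

```javascript
// Hypothetical helper: try to unlock on touchstart and, for iOS versions
// that ignore touchstart (it may turn into a scroll), on touchend too.
function addTouchUnlock(context, target) {
  function unlock() {
    if (context.state === "suspended") {
      context.resume();
    }
  }
  target.addEventListener("touchstart", unlock, false);
  target.addEventListener("touchend", unlock, false);
  return unlock;
}

// In a browser:
// addTouchUnlock(audioContext, document.body);
```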

Once the audio context has been unlocked, we want to remove the touch event listeners since we don’t need them anymore. For this we need to figure out when the audio context was actually unlocked.

The technique used in an earlier solution to determine this was to query the playbackState attribute of the sound that was created on the fly, shortly after starting its playback. Querying it directly after starting wouldn’t work, since starting a sound is an asynchronous action, so the query had to be done from a timeout callback. Then, if the sound was in PLAYING_STATE or FINISHED_STATE, you could assume that the context was unlocked.

This approach might still work in some cases, but it is far from simple and introduces a compatibility issue, since playbackState is no longer part of the official Web Audio API specification.

One neat thing is that the resume() method of the audio context actually returns a Promise object. When that promise resolves, we can be certain that the audio context has been unlocked and that we can remove the touch event listeners:
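A sketch of that cleanup, not necessarily the author’s exact code; the helper name addTouchUnlockWithCleanup is hypothetical and target is document.body in a browser. Removing a listener only works when the same function reference is passed, which is why unlock is a named function shared by both calls.

```javascript
// Hypothetical helper: resume on touch, then drop both listeners once the
// Promise returned by resume() resolves (the context is running by then).
function addTouchUnlockWithCleanup(context, target) {
  function unlock() {
    context.resume().then(function () {
      target.removeEventListener("touchstart", unlock);
      target.removeEventListener("touchend", unlock);
    });
  }
  target.addEventListener("touchstart", unlock, false);
  target.addEventListener("touchend", unlock, false);
}

// In a browser:
// addTouchUnlockWithCleanup(audioContext, document.body);
```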

And there you go: a bulletproof way of unlocking Web Audio on iOS devices!

Let’s make a few more adjustments to make this piece of code reusable. First, let’s wrap it all in a function and put it in a try...catch block to prevent any unexpected errors from breaking our entire app:
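A sketch of the reusable version, under a few assumptions: the name unlockWebAudio is hypothetical, scope stands for window and target for document.body. Note that try...catch only guards the synchronous setup; a rejected resume() Promise fires later, so it gets its own rejection handler here.

```javascript
// Hypothetical reusable helper: set up touch unlocking only when needed,
// without letting an unexpected error break the rest of the app.
function unlockWebAudio(context, scope, target) {
  try {
    if (context.state === "suspended" && "ontouchstart" in scope) {
      const unlock = function () {
        context.resume().then(
          function () {
            // Unlocked: the listeners are no longer needed.
            target.removeEventListener("touchstart", unlock);
            target.removeEventListener("touchend", unlock);
          },
          function (reason) {
            // Asynchronous failures bypass try...catch, so log them here.
            console.error(reason);
          }
        );
      };
      target.addEventListener("touchstart", unlock, false);
      target.addEventListener("touchend", unlock, false);
    }
  } catch (error) {
    // Synchronous setup errors are logged instead of propagating.
    console.error(error);
  }
}

// In a browser:
// unlockWebAudio(audioContext, window, document.body);
```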

And finally, let’s provide some useful information, such as whether there was an actual need to unlock Web Audio, or whether an error occurred and what the reason for it was. We can do this by wrapping everything inside a Promise and returning it from our function:
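A sketch of the Promise-returning variant. The name unlockWebAudio and the scope and target parameters (window and document.body in a browser) are assumptions. It resolves with true after an unlock triggered by a touch, with false when no unlock was needed, and rejects with the reason if resume() fails.

```javascript
// Hypothetical helper: report whether an unlock was needed and happened.
function unlockWebAudio(context, scope, target) {
  return new Promise(function (resolve, reject) {
    try {
      if (context.state !== "suspended" || !("ontouchstart" in scope)) {
        // Nothing to unlock, e.g. on devices other than iOS.
        resolve(false);
        return;
      }
      const unlock = function () {
        context.resume().then(function () {
          target.removeEventListener("touchstart", unlock);
          target.removeEventListener("touchend", unlock);
          resolve(true);
        }, reject);
      };
      target.addEventListener("touchstart", unlock, false);
      target.addEventListener("touchend", unlock, false);
    } catch (error) {
      reject(error);
    }
  });
}
```

An error thrown synchronously inside a Promise executor already turns into a rejection, so the try...catch mainly makes that intent explicit. If both touchstart and touchend fire before resume() settles, the second resolve(true) call is simply ignored.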

Now you can use this function anywhere in your code like this:
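A usage sketch. To keep it self-contained, the hypothetical handleUnlock below accepts any Promise that resolves to true when an unlock actually happened and to false when none was needed, and rejects on error, which is the information the Promise-returning function described above is meant to report. The returned strings are illustrative only.

```javascript
// Hypothetical consumer of an unlock Promise<boolean>.
function handleUnlock(unlockPromise) {
  return unlockPromise.then(
    function (unlocked) {
      if (unlocked) {
        // Unlocked from an explicit user action: sounds can start playing now.
        return "unlocked";
      }
      // No unlock was needed, e.g. devices other than iOS.
      return "not needed";
    },
    function (reason) {
      // Report the failure instead of failing silently.
      console.error(reason);
      return "failed";
    }
  );
}

// In a browser, pass the Promise returned by your unlock function:
// handleUnlock(promiseFromUnlockFunction).then(function (status) { /* ... */ });
```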