The Apple Battery Cover-Up: Triumph of Management, Failure of Leadership

This is a difficult post for me to write. It’s a post about Apple, yet it’s not the same Apple where I spent 22 years of my career. It’s also a post about competent management and the utter failure of leadership.

You’ve probably seen the headlines by now. Apple recently rolled out an update that slows down older phones, ostensibly in an effort to preserve the life of aging batteries.

The thing is, Apple didn’t tell anyone that this was happening; a lot of iPhone users upgraded to newer models when they could have simply bought new batteries (a much smaller financial investment) and continued to use their old phones.

It’s been a public relations nightmare, with multiple class action suits already filed. And Apple’s solution to the problem has been to apologize, rather feebly and only after the whole thing was uncovered by a Reddit user, and to knock the battery replacement cost down to $29. (It normally runs about $79.)

This is unbelievable to me.

When I was at Apple in the early 2000s, I ran into a somewhat similar problem, albeit on a much smaller scale. About 800 iBooks (yes, there was actual hardware called an iBook), all of them in university settings, started exhibiting problems with their CD trays.

We acted quickly, and replaced every single one of those 800 units, no questions asked.

I know for a fact that we lost a couple of customers to Microsoft over this. I also know that we did the right thing. We were proud to have done the right thing. And most of our customers appreciated it.

Even with this slight inconvenience, they felt good about how we were treating them. Our response to the hardware malfunction enhanced our brand and our reputation.

Again: The Apple you’re reading about today is not the same company I worked for all those 22 years.

I can think of so many better ways they could have handled this:

1. The best solution would have been to just be upfront with customers in the first place. Say, “Hey, we’re glad you enjoy your old-school iPhone, but you’re going to be left behind; in order to download the latest iOS updates, you need to upgrade to a newer device.”

This kind of thing is, of course, totally normal in the tech world; you can’t run the latest macOS on an older MacBook any more than you can run the latest version of Windows on a 1980s PC. Tech changes, and eventually goes obsolete.

2. Another solution? In response to the aging battery issue, offer a coupon to those old-school iPhone users, giving them 50 percent off an iPhone 8. This is a feel-good solution: a new phone for a fraction of the price! Plus, it gets people into the Apple Store, and makes them actually happy.

3. Apple could even have offered to replace those old batteries in the store, free of charge. That would have been an inconvenient and cumbersome solution, but at least it would have shown some real customer service initiative. And again, it would generate traffic to the Apple Store and an opportunity to upgrade. Has everyone forgotten about the traffic conversion factor?

Any of those solutions would have been preferable to Apple’s secretive software upgrade — which, again, we only know about through social media users, not because Apple was forthcoming about it — to say nothing of its lame apology and its trifling $29 battery offer.

Here I might note that, according to some of my sources on the inside, the actual cost of a battery is in the single digits, so the fact that Apple is still making people pay $29 for a new one, in the face of a major PR scandal and with $200 billion in reserves, is absolutely stunning.

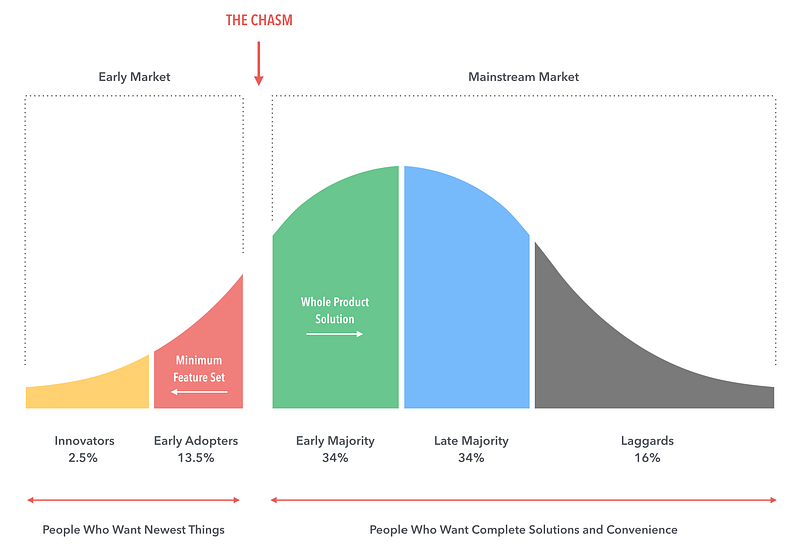

Sure: In the short term, Apple’s saving a few bucks. That’s because the company is managing this problem well.

Managing a problem means getting through it with minimum trouble to the company. It involves a focus on numbers and accounting, but a short-sightedness when it comes to relationships and customer goodwill.

Instead of managing the problem, Apple should be leading it — not doing the bare minimum to save its neck, but doing the right thing, taking pride in doing the right thing, and trusting that customers will appreciate it. That’s what leadership means.

In other words, Apple should be thinking a few steps ahead, and realizing that a few bucks for free battery replacements (or discounted iPhone upgrades) mean nothing compared to the loss of goodwill the company now faces.

Goodwill (or relationships, when you get right down to it) is the most precious commodity it or any other company has. And Apple is squandering it.

And that’s to say nothing of the lack of communication here, as if Apple’s executives don’t know the old political adage that the cover-up is always worse than the deed.

This whole episode may be seen as a turning point for Apple: its real transition from Steve’s company into Tim’s. Tim Cook is a great manager, and he’s certainly managing this situation ably.